NeuralKG is an open-source Python library for diverse representation learning of knowledge graphs. It implements three series of Knowledge Graph Embedding (KGE) methods: conventional KGEs, GNN-based KGEs, and Rule-based KGEs. Within a unified framework, NeuralKG reproduces the link prediction results of these methods on benchmarks, freeing users from the laborious task of reimplementing them, especially for methods originally written in languages other than Python. NeuralKG is also highly configurable and extensible. It provides decoupled modules that can be mixed and adapted to each other. With NeuralKG, developers and researchers can therefore quickly implement their own models and find suitable training methods to achieve the best performance efficiently.

Knowledge Graphs (KGs) represent real-world facts as symbolic triples of the form (head entity, relation, tail entity), abbreviated as (h, r, t), for example (Earth, containedIn, SolarSystem). Many large-scale knowledge graphs have been proposed, such as YAGO, Freebase, NELL, and Wikidata. They are widely used as providers of background knowledge in applications such as natural language understanding, recommender systems, and question answering.
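As a minimal sketch of the triple representation described above, the snippet below stores facts as (h, r, t) tuples and maps entities and relations to integer ids, which is the form embedding models typically consume. The `Triple`, `build_vocab`, and `encode` names are hypothetical and are not part of NeuralKG; the second fact is added only for illustration.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    head: str
    relation: str
    tail: str


def build_vocab(triples):
    """Assign consecutive integer ids to entities and relations in first-seen order."""
    ent2id, rel2id = {}, {}
    for h, r, t in triples:
        for entity in (h, t):
            ent2id.setdefault(entity, len(ent2id))
        rel2id.setdefault(r, len(rel2id))
    return ent2id, rel2id


def encode(triples, ent2id, rel2id):
    """Convert symbolic triples into (head_id, relation_id, tail_id) tuples."""
    return [(ent2id[h], rel2id[r], ent2id[t]) for h, r, t in triples]


triples = [
    Triple("Earth", "containedIn", "SolarSystem"),
    Triple("Mars", "containedIn", "SolarSystem"),  # extra fact, illustration only
]
ent2id, rel2id = build_vocab(triples)
encoded = encode(triples, ent2id, rel2id)
```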

Traditional querying and reasoning on KGs rely on manipulating symbolic representations, which makes them vulnerable to the noise and incompleteness of KGs. With the development of deep learning, representation learning of KGs has therefore been widely explored. It aims to embed a KG into a low-dimensional vector space while preserving the structural and semantic information contained in the KG, and these methods are accordingly called Knowledge Graph Embeddings (KGEs). Research in this area has flourished, and different series of KGEs have been proposed. Conventional KGEs (C-KGEs) apply a geometric assumption in vector space to true triples and take a single triple as input for triple scoring. They ignore the rich graph structure around entities that reflects their semantics. Graph Neural Networks (GNNs), which are effective at encoding graph structure, have therefore been widely applied in KGEs. Given the input graph, GNN-based methods score triples using entity representations aggregated from each entity's neighbors rather than the entity embeddings themselves, which helps them capture graph patterns explicitly. Apart from triples and graphs of triples, another essential part of KGs, logic rules, is also considered in KGEs. Rules define higher-level semantic relationships between different relations. Many Rule-based KGEs inject logic rules, either pre-defined or learned, into KGEs to improve the expressiveness of the models.
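To make the contrast between the first two series concrete, here is a small sketch. It assumes one particular geometric assumption, translation (h + r ≈ t, as in TransE-style models), and a plain mean over neighbors as a stand-in for GNN aggregation; the text above does not commit to either choice. The function names and toy embeddings are hypothetical and do not come from NeuralKG.

```python
import math


def translation_score(h, r, t):
    """Score a single triple as the negative L2 distance ||h + r - t||.

    Higher (closer to zero) means the triple fits the translation assumption better.
    """
    return -math.sqrt(sum((hi + ri - ti) ** 2 for hi, ri, ti in zip(h, r, t)))


def mean_aggregate(entity, neighbors, emb):
    """Average an entity's embedding with its neighbors' embeddings.

    This is a heavily simplified stand-in for one GNN message-passing step.
    """
    vectors = [emb[entity]] + [emb[n] for n in neighbors]
    dim = len(emb[entity])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


# Toy 2-dimensional embeddings chosen by hand for illustration.
emb = {"Earth": [0.0, 1.0], "SolarSystem": [1.0, 1.0], "Mars": [0.0, 3.0]}
rel = {"containedIn": [1.0, 0.0]}

# A C-KGE-style model scores the triple from its own three embeddings only.
true_score = translation_score(emb["Earth"], rel["containedIn"], emb["SolarSystem"])
false_score = translation_score(emb["SolarSystem"], rel["containedIn"], emb["Earth"])

# A GNN-style model would first replace the entity embedding with an aggregated one.
earth_aggregated = mean_aggregate("Earth", ["Mars"], emb)
```

With these hand-picked vectors the true triple scores higher than the reversed one, and the aggregated representation of `Earth` depends on its neighborhood rather than on its own embedding alone.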

Although there are many KGE methods and authors publish their source code, it is difficult for others to apply and compare them because they differ in programming language (e.g., C++, Python, Java) and framework (e.g., TensorFlow, PyTorch, Theano). Several open-source knowledge graph representation toolkits have therefore been developed, including OpenKE, Pykg2vec, TorchKGE, LIBKGE, and DGL-KE. They provide implementations and evaluation results of widely used and recently proposed models, making it easier for people to apply those methods.

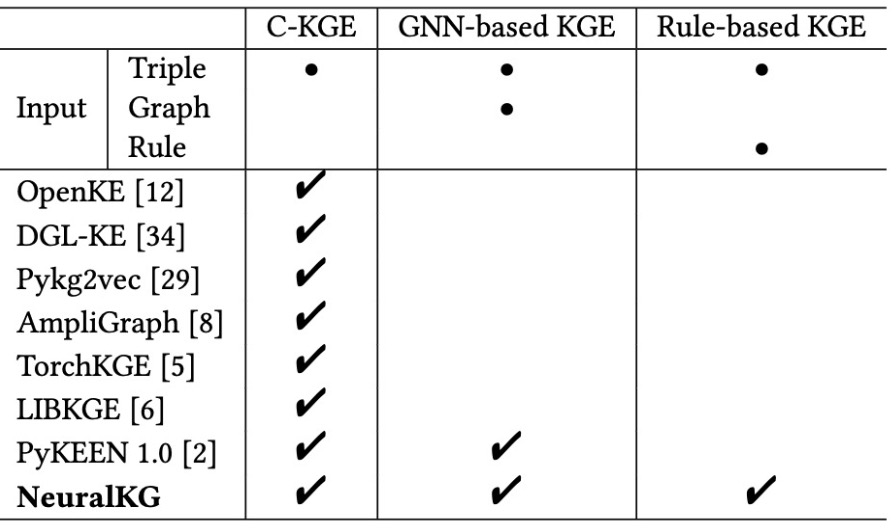

However, most of these toolkits focus on implementing C-KGEs, only a few of them implement GNN-based KGEs, and none of them supports Rule-based KGEs. For the more general purpose of diverse representation learning of KGs, it is therefore necessary to build a toolkit that supports the implementation and creation of all three series of methods. Building such a toolkit is non-trivial, because the three series are different but not independent. For example, C-KGEs are the backbone of most GNN-based and Rule-based KGEs. A concise toolkit should therefore contain decoupled components so that all methods can be trained in a unified framework.

NeuralKG is such an open-source library for diverse representation learning of KGs. We named it NeuralKG because it targets general neural representations of KGs in vector spaces. It supports the development and design of all three series of KGEs: C-KGEs, GNN-based KGEs, and Rule-based KGEs. We summarize the capabilities of the different libraries in the following table:

NeuralKG is a unified framework with various decoupled modules, including KG Data Preprocessing, Sampler for negative sampling, Monitor for hyperparameter tuning, and Trainer covering training and model validation. Users can thus use it for comprehensive and diverse research on, and applications of, representation learning on KGs. Furthermore, we provide detailed documentation to make the NeuralKG library easy for beginners to use. We maintain the NeuralKG website to organize an open and shared KG representation learning community, and we will provide long-term technical support to meet new needs in the future.
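The negative sampling handled by the Sampler module can be illustrated with a generic sketch. It assumes the common uniform corruption strategy, in which the head or tail of a true triple is replaced with a random entity and candidates that are known true triples are rejected. This is not NeuralKG's Sampler API, and the `corrupt` function and toy ids are hypothetical.

```python
import random


def corrupt(triple, entities, known, rng):
    """Return a negative triple by replacing the head or the tail with a random entity.

    Candidates that already appear in `known` are rejected, so the result is never
    a known true triple (and therefore never the input triple itself).
    """
    h, r, t = triple
    while True:
        entity = rng.choice(entities)
        # Corrupt the head or the tail with equal probability.
        candidate = (entity, r, t) if rng.random() < 0.5 else (h, r, entity)
        if candidate not in known:
            return candidate


entities = [0, 1, 2, 3]            # toy entity ids
known = {(0, 0, 1), (2, 0, 1)}     # toy set of true (head, relation, tail) ids
rng = random.Random(0)             # seeded for repeatability
negative = corrupt((0, 0, 1), entities, known, rng)
```

Keeping the sampler as a standalone function that only needs the triple, the entity list, and the set of known triples mirrors the decoupling idea: the scoring model does not need to know how negatives are produced.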

Available resources about NeuralKG:

- Website: http://neuralkg.zjukg.cn/

- GitHub: https://github.com/zjukg/NeuralKG

- Gitee: To be released soon.

- Documentation: https://zjukg.github.io/NeuralKG/kgemodel.htm